How many emails do you need to trigger from the server every day? How many of them are just single row query result? Well, I have to send a lot html formatted, single row query result to clients. Not funny at all. So if you are doing the same thing, today's recipe could save you a bit.

You don't need to read this post line by line, to save your time, below is the definition. If you are an expert in dynamic query, you should know what I am doing fairly quick. In case my sp is too complicated, I put my notes after the procedure.

Create Procedure [dbo].[sp_SendVerticalHTMLEmail]

@Query nvarchar(max),

@CSSStyle nvarchar(max),

@EmailTo nvarchar(500),

@EmailCc nvarchar(500),

@EmailSubjct nvarchar(255),

@EmailAttachments nvarchar(max)

as

BEGIN

SET NOCOUNT ON

declare @id int = 0

BEGIN TRY

declare @EmailBody nvarchar(max) = '', @cmd nvarchar(max) = ''

IF @CSSStyle = '' OR @CSSStyle is null

SET @CSSStyle = 'table {border-collapse: collapse; font-size:90%}table, td, th {border: 1px solid black;}td.header {font-weight: bold}'

select @cmd += 'IF object_id (''tempdb.dbo.#Tmp'') IS NOT NULL DROP Table tempdb.dbo.#Tmp;'+ CHAR(10) + char(13)

select @cmd += 'select * INTO #Tmp FROM ( ' + @query + ') tmp;' + CHAR(10) + char(13)

select @cmd +='declare @tmpSql nvarchar(max) = ''''

select @tmpSql +=

''SELECT '''''' + name + '''''' as R1, (select CAST('' + QUOTENAME(CAST(name as varchar(255)), ''[]'') + '' as varchar(1000)) from #tmp) as R2 UNION ALL '' + CHAR(10) + char(13)

from tempdb.sys.columns where object_id = object_id(''tempdb.dbo.#Tmp'');

select @tmpSql = left(@tmpSql, len(@tmpSql) - 12);

declare @var nvarchar(max) = ''''

select @var =''SELECT @body = ('' + CAST(''SELECT td = R1, '''''''', td = R2 from ('' + @tmpSql + '') t FOR XML PATH(''''tr'''')'' as nvarchar(max)) + '')''

exec sp_executesql @var, N''@body nvarchar(max) OUTPUT'', @body output'

--select @cmd

exec sp_executesql @cmd, N'@body nvarchar(max) output', @EmailBody output

select @EmailBody = '

'

exec msdb..sp_send_dbmail

@recipients = @EmailTo,

@copy_recipients = @EmailCc,

@subject = @EmailSubjct,

@body = @EmailBody,

@body_format = 'HTML',

@file_attachments = @EmailAttachments,

@mailitem_id = @id output

END TRY

BEGIN CATCH

select @id = -1

END CATCH

RETURN @id

END

So here is my notes, The basic concept is like this:

1. I want to have a sp which can accept a query statement, wrap my query result, and send through in the email body right away. I am targeting single row query result because to me, most multi row result sets need to be handled separately. But single row result is just like a short and quick notification.

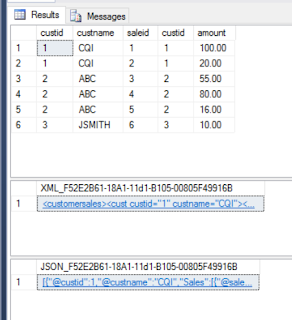

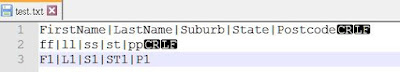

2. Now I know I am looking at single row result set. But I have no idea how many columns a query can return. So the eaist way is turning the result set 90 degrees to become a vertical two column result set, the first column is the original column header, and the second column is the row contents.

3. To read the column titles from the result set, I load the query result into a temp table by calling a dynamic query:

select @cmd += 'select * INTO #Tmp FROM ( ' + @query + ') tmp;' + CHAR(10) + char(13)

4. Now think in this way, from the temp table, I can get a two-row result set: the first row is the column titles of the temp table, and the second row is the original data row. It is just a simple information schema query, and an union statement. So for the temp table, we can simple write down

select * from sys.columns where object_id = object_id('tempdb.dbo.#Tmp')

union all

select * from tempdb.dbo.#Tmp

5. Once we have the two row result set, we can then use a for XML clause to generate html email. Something just like:

SELECT @body = (SELECT td = R1, '', td = R2 from (select * from myTable) t FOR XML PATH('tr'))

6. Everything looks pretty simple, isnt it? Hold on for one second. Well, the difficult part of the procedure is that, we have to get the dynamic result set within a dynamic execution context. Get confused? Think about it, starts from step 4, we transform the resultset by referring #Tmp. But this temp table was generated by a dynamic statement in step 3. So to make temp table context available to the following execution steps, we have to wrap the procedure "sp_executesql" within the dynamic statement. This is the reason you can see two "sp_executesql" calls within my procedure.

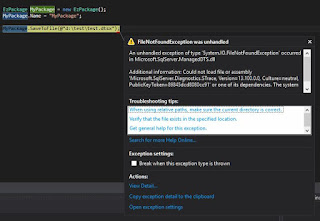

7. the last point, you might have noticed, I named my procedure "sp_xxx", not a good practice as this pattern leads to bad practice. But in my case, I want to have this sp available to all my databases, so I registered this procedure as a system procedure by calling

sp_ms_marksystemobject 'sp_SendVerticalHTMLEmail'

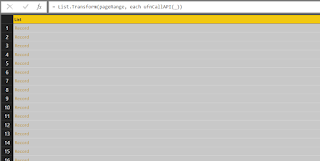

So now we can have a test

and the 2nd test

Enjoy it :)